
Zsuzsa Cziracky Londe

James Valentine

Robert A. Filback

Universities often provide financial support for their international graduate students by offering teaching assistantships upon admission. Low oral proficiency in English, however, could rule out this option. A pre-admission online video interview would allow departments to screen international applicants rather than relying on TOEFL scores alone or having no information about their oral language skills at all.

Each year about 672,000 international students (IIENetwork, 2009) gain admission to U.S. universities. These international undergraduate and graduate students are vital to a university's diversity, but many require financial support. For graduate students, teaching assistant (TA) positions are an especially advantageous source of that support because the students also gain teaching experience in the process. The university, in turn, benefits from being able to fill needed TA positions.

When international students send their application packets to U.S. universities, their English proficiency can be inferred only from their TOEFL scores; furthermore, some universities, such as the University of Southern California (USC), do not even require TOEFL scores from applicants. Because departments must make budgetary decisions, including offers of graduate support, before admitting a student, it is vital for them to know whether the student meets the university's language proficiency requirements for TAs.

In our presentation, we described an online video-interview assessment tool that provides departments with a preliminary language evaluation before admission. Drawing on our experience at USC, the university with the largest international student population in the country (7,200 students in 2011-2012, according to USC Facts and Figures), we provided background on the origins of the need for an online test, practical and theoretical considerations regarding test design and implementation, and technical advice regarding current videoconferencing technologies.

BACKGROUND AND CURRENT PRACTICES

At USC we have developed, and over the years refined, a face-to-face oral interview for international teaching assistants (ITAs). We administer this test twice each semester, at the beginning and at the end of the fall, spring, and summer semesters. The procedure is as follows:

- Twenty-four hours before testing, potential ITAs receive two randomly selected terms or concepts related to their academic field. At our request, departments have provided lists of the 100 most relevant terms in their discipline that graduate students should be able to explain. These lists are available on our Web site by department or discipline.

- On the day of the exam, each student spends about 15 minutes with two testers, both faculty members from the American Language Institute. Students are first asked about their background, their study or research interests, and their plans (5-8 minutes). They are then asked to assume the role of a teaching assistant in a class of undergraduates and to explain the term they have chosen, using the blackboard. The testers ask questions during the presentation.

- After the student leaves, the testers rate the student's pronunciation (P), language/grammar (L), and discourse/presentation skills (D) on a scale of 1 to 7, with 7 representing nativelike proficiency.

- There are three possible outcomes:
  - Student is cleared to teach: no ESL language support necessary
  - Student is cleared to teach: ESL language support necessary
  - Student is not cleared to teach
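The record keeping behind the procedure above can be sketched as a small data model. This is a hypothetical Python illustration, not USC's actual system: the names `draw_terms`, `Rating`, `ExamRecord`, and `Outcome`, as well as the sample terms, are invented for illustration. The sketch draws two distinct terms at random from a department's list, rejects scores outside the 1-7 scale for P, L, and D, and stores both testers' ratings with one of the three outcomes. The procedure does not say how the scores are combined into an outcome, so the sketch leaves that decision to the testers rather than inventing a cutoff.

```python
import random
from dataclasses import dataclass
from enum import Enum

# Rating categories from the procedure: pronunciation (P),
# language/grammar (L), and discourse/presentation skills (D).
CATEGORIES = ("P", "L", "D")
SCALE_MIN, SCALE_MAX = 1, 7  # 7 = nativelike proficiency


class Outcome(Enum):
    """The three possible outcomes of the ITA oral exam."""
    CLEARED_NO_SUPPORT = "cleared to teach; no ESL language support necessary"
    CLEARED_WITH_SUPPORT = "cleared to teach; ESL language support necessary"
    NOT_CLEARED = "not cleared to teach"


def draw_terms(term_list, k=2, rng=None):
    """Hypothetical helper: draw k distinct terms at random from a department's list."""
    rng = rng or random.Random()
    if len(term_list) < k:
        raise ValueError("term list is shorter than the number of terms requested")
    return rng.sample(list(term_list), k)


@dataclass(frozen=True)
class Rating:
    """One tester's scores for one student."""
    P: int
    L: int
    D: int

    def __post_init__(self):
        # Reject any score outside the 1-7 scale.
        for name in CATEGORIES:
            score = getattr(self, name)
            if not SCALE_MIN <= score <= SCALE_MAX:
                raise ValueError(f"{name} score {score} is outside {SCALE_MIN}-{SCALE_MAX}")


@dataclass
class ExamRecord:
    """Hypothetical record: drawn terms, both testers' ratings, and the testers' decision."""
    student_id: str
    terms: list
    ratings: tuple  # one Rating per tester
    outcome: Outcome

    def __post_init__(self):
        # Each exam is rated by two testers.
        if len(self.ratings) != 2:
            raise ValueError("expected exactly two ratings, one per tester")
```

For example, `draw_terms(["entropy", "enthalpy", "catalyst", "isotope"], rng=random.Random(42))` returns two distinct terms from the list; passing a seeded generator only makes the draw reproducible for testing.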

Only a small number of students are not cleared to teach, but for budgetary planning and student support even that number can matter. An online interview conducted before admission, and thus before students arrive on campus, could be a useful tool. It is important, however, to keep testing principles in mind when using it.

ASSESSMENT PRINCIPLES WHEN USING AN ONLINE ORAL TEST

We set out to answer two questions:

- Is the online test the same test as the in-person test? In other words, with the same test task but a different delivery channel, does it have the same reliability and validity?

- Is it fair to use the online and in-person tests interchangeably?

To answer these questions, we used two second language assessment frameworks developed by Lyle Bachman and Adrian Palmer (1996, 2010): the “qualities of test usefulness” and the “assessment use argument.”

TEST USEFULNESS = Reliability + Validity + Authenticity + Interactiveness + Impact + Practicality

After analyzing each factor of test usefulness, we concluded that, without research comparing student performance and rating consistency on the online and in-person oral exams, we can offer only a noncommittal evaluation of the student (i.e., of whether he or she will be allowed to teach), and that a student who enrolls would still have to take the regular ITA exam upon arrival at the university. The answer to the first question, therefore, is that without a study we cannot assume the two tests to be formally equivalent.

Accountability is essential in the process of designing,

administering, and evaluating tests, especially in the case of a

high-stakes test such as the ITA oral exam. Bachman and Palmer’s (2010)

assessment use argument provides a useful framework to guide test

designers through the process of collecting evidence in support of the

test. Analyzing the two tests (online vs. in-person) using these guidelines, we concluded that, until studies are conducted, it would not be fair to allow some students to take the online test while others take the in-person test, because we do not yet understand the possible effects of online video testing or of online rating. The answer to the second question (Is it fair to use the online and in-person tests interchangeably?) is therefore also no: The two tests should not be used interchangeably until their similarities and differences have been identified.

For these reasons, we have decided to advise departments that our assessment is preliminary only and that we reserve the right to revise even this preliminary opinion on the basis of the student's in-person exam performance. In addition, because we do not know how the qualities of the two tests compare, we recommend that they not use similar tasks, which underscores the difference between a preliminary test and the actual test.

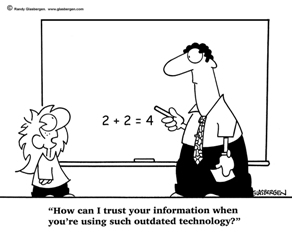

We are planning to conduct a comparison study and look forward to sharing the results. In the meantime, it is important to remember that we cannot let technology (or students' requests) drive our test design; every detail of the assessment process must be justified first.

APPENDIX

The following is a list of current

technologies that could be useful for online oral testing:

| Category | Technology | Notes |
|---|---|---|
| Free video-conferencing | Skype | Established, simple download; Skype Premium allows group videoconferencing |
| | Google Video-chat | Simple to use; lower resolution; requires Gmail account |
| | Google+ Hangout | Still working bugs out, but shows much promise, including group video chat and multimedia sharing |
| | OpenTok | Basic level is free; API and (beta) plug-and-play apps |
| Ways to record video-conferences | Skype recorders | Several tools available to record Skype sessions and enable split 50/50 video images; about $20-30 |
| | iShowU | Screen recording; download about $20 |
| | Jing | Free for recordings up to 5 min. |
| | Screenr | Free for recordings up to 5 min.; simple to use, no download required, recordings hosted online |
| | Camtasia | Screen capture software |
| | I Show You | Screen capture software |
| Live virtual classrooms | Bb Collaborate | Blackboard's incorporation of Elluminate and Wimba |
| | Adobe Connect | Used by MAT and other 2tor partners |
| | WebEx | Has been around for some time |
| | GoToMeeting | Virtual meeting rooms; monthly license cost |
| Proctoring | ProctorU | One of many established and emerging online proctoring services |
| | ProctorCam | One of many established and emerging online proctoring services |

REFERENCES


Bachman, L. F., & Palmer, A. S. (1996). Language testing in practice. Oxford, England: Oxford University Press.

Bachman, L. F., & Palmer, A. S. (2010). Language assessment in practice. Oxford, England: Oxford University Press.

IIENetwork. (2009). Open Doors: U.S. students studying abroad and Open Doors 2009: International students in the United States.

Zsuzsa Londe is the ITA testing coordinator at the

University of Southern California, and she teaches academic writing at

the American Language Institute. Languages, language teaching, research,

assessment, and statistics are her ongoing academic interests.

Jim Valentine is the director of the American Language

Institute at the University of Southern California. His principal

applied research interests are human motivation, the instructional

design of language programs, organizational psychology, and educational

anthropology.

Rob Filback is associate professor of clinical education and faculty lead for the master of arts in Teaching English to Speakers of Other Languages (MAT TESOL). His current research focuses on issues in international teacher education and on program innovations, including online learning.